Search Engine Optimization (SEO) extends far beyond improving a website's ranking on Google: it also covers attracting and retaining traffic from diverse sources, building brand recognition, and understanding user search intent. The sections below look at specific strategies and tools for each of these aspects.

- Diversification of Traffic Sources

Relying on a single traffic source is risky. Modern SEO strategies attract traffic through several channels, including social media, email marketing, and direct visits, so that visitor numbers stay stable even when search engine algorithms change. Notably, data from Google's Chrome browser and from direct URL visits allows website traffic to be tracked more precisely. Businesses should therefore expand their traffic sources, particularly by increasing brand exposure on social platforms.
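As a rough illustration of how reliance on a single channel might be checked, the sketch below computes each channel's share of total visits and flags any channel above a cutoff. The channel names, the visit counts, and the 60% threshold are all assumptions made for this example, not figures from any analytics tool.

```python
def channel_shares(visits):
    """Return each channel's share of total visits as a fraction between 0 and 1."""
    total = sum(visits.values())
    if total == 0:
        return {channel: 0.0 for channel in visits}
    return {channel: count / total for channel, count in visits.items()}


def over_reliant_channels(visits, max_share=0.6):
    """List channels whose share exceeds max_share (an assumed, adjustable cutoff)."""
    shares = channel_shares(visits)
    return [channel for channel, share in shares.items() if share > max_share]


# Hypothetical monthly visit counts per channel.
monthly_visits = {
    "organic_search": 7000,
    "social": 1500,
    "email": 1000,
    "direct": 500,
}

print(channel_shares(monthly_visits))
print(over_reliant_channels(monthly_visits))
```

With these made-up numbers, organic search carries most of the traffic, which is exactly the situation the diversification advice is meant to avoid.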

- Strengthening Brand and Domain Recognition

Brand awareness directly impacts SEO: users are more likely to click on the websites of brands they already know in search results. By consistently optimizing rankings for a variety of long-tail keywords, brands can increase their visibility. Improving "site authority" is another crucial route to greater brand influence. Through a steady output of high-quality content and careful optimization, businesses can secure better positions in search results and strengthen their online brand.

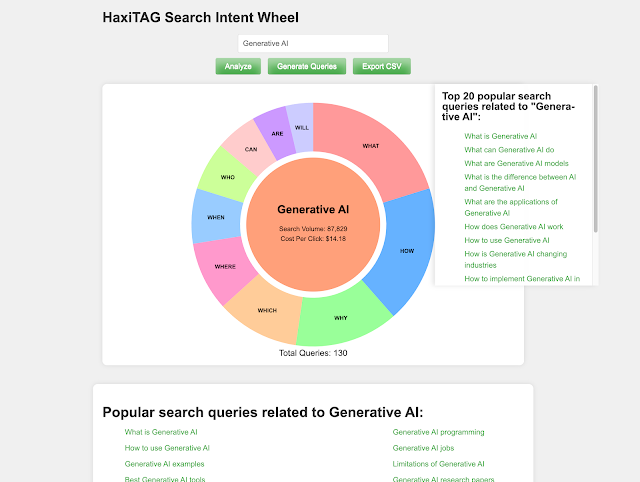

- Deep Understanding of Search Intent

Understanding user search intent is key to a successful SEO strategy. Tools like Semrush and SimilarWeb help analyze where visitors come from and how they behave, which makes it possible to tailor website content to user needs and keep users coming back. Businesses should aim to become the "ultimate destination" in users' search paths by offering comprehensive, practical content. By tracking search sessions, Google can understand user needs more accurately and adjust search rankings accordingly.

- Optimizing Titles and Descriptions to Increase Click-through Rates

Titles and descriptions form the first impression users get in search results, and optimizing them can significantly improve click-through rates. Capitalizing keywords or otherwise enhancing visual appeal helps a page stand out among numerous results. Title optimization affects not only click-through rates but also page rankings, so titles should be both attractive and relevant.
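To make the click-through rate concrete: it is clicks divided by impressions. The sketch below compares two title variants on that basis. The titles and the click and impression counts are invented for illustration, and the helper names are hypothetical.

```python
def click_through_rate(clicks, impressions):
    """Click-through rate as a fraction: clicks divided by impressions."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


def best_title(variants):
    """Return the title with the highest CTR.

    variants maps each title to a (clicks, impressions) pair.
    """
    return max(variants, key=lambda title: click_through_rate(*variants[title]))


# Hypothetical search-result data for two versions of the same page title.
title_variants = {
    "SEO Guide": (40, 2000),
    "SEO Guide: 9 Tactics That Lift Rankings": (90, 3000),
}

for title, (clicks, impressions) in title_variants.items():
    print(f"{title}: {click_through_rate(clicks, impressions):.1%}")
print("Best:", best_title(title_variants))
```

A comparison like this is only meaningful when both variants have enough impressions; small samples can easily point to the wrong title.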

- Evaluating the Effect of Hidden Content

Hiding content behind accordions or similar elements may affect a page's bounce rate: when users cannot quickly find the information they need, the result can be negative click signals. Businesses should regularly evaluate how these pages perform to make sure hidden content does not harm the user experience. A sensible page layout and smooth navigation improve the overall experience and reduce bounce rates.

- Optimizing Page Layout and User Interaction

Website layout and user interaction are also crucial to SEO success. A clear page structure and smooth navigation improve the user experience and prolong the time users spend on the site. By optimizing homepage design and navigation, businesses can ensure users easily find the information they need, which improves overall page rankings. Stronger user interaction sends positive signals that boost the SEO performance of the entire website.

- Deepening Rather Than Broadening Content

In content strategy, updating and deepening existing content is often more effective than creating new content. The "ContentEffortScore" assesses how difficult a document was to create, and high-quality images, videos, and unique content all contribute positively to a page's SEO performance. Keeping titles consistent with the content beneath them, and using techniques like text vectorization to analyze topic relevance, are further important strategies.
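The idea of checking title-content consistency with text vectorization can be sketched in its simplest form: turn the title and the body into term-count vectors and compare them with cosine similarity. This is an assumption-laden toy, not how any search engine scores pages. A realistic setup would more likely use learned embeddings, which capture meaning beyond shared words; the function names here are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Turn text into a term-count vector (lowercased alphanumeric tokens)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two term-count vectors, from 0.0 to 1.0."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def title_body_similarity(title, body):
    """Score how much a title's vocabulary overlaps with the body text."""
    return cosine_similarity(vectorize(title), vectorize(body))


title = "Building High-Quality Backlinks"
on_topic = "High-quality backlinks from relevant sites remain a strong signal."
off_topic = "Our office moved to a new address last month."

print(title_body_similarity(title, on_topic))
print(title_body_similarity(title, off_topic))
```

The on-topic body scores higher than the off-topic one, which shares no terms with the title. Because this version only counts shared words, it cannot tell that "links" and "backlinks" are related, which is the gap that embedding-based vectorization is meant to close.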

- Building High-Quality Backlinks

Backlinks are a crucial signal in SEO, especially those from high-traffic or authoritative websites. Businesses should focus on obtaining high-quality backlinks from sites in the same country and with relevant content, avoiding low-quality or "toxic" links. Furthermore, when evaluating link value, one should consider not only the anchor text itself but also the naturalness and fluency of its surrounding context.

- Emphasizing Author Expertise

Google increasingly values content expertise and authority. Therefore, showcasing authors' professional backgrounds and credibility has become particularly important. A few highly qualified authors often outweigh numerous low-credibility ones. By enhancing author authority and expertise, businesses can effectively improve their content rankings in search engines.

- Utilizing Web Analytics Tools

Finally, businesses should make full use of tools like Google Analytics to track and analyze user interaction data, promptly identify issues, and adjust their SEO strategies. For pages with abnormally high bounce rates in particular, businesses should investigate the causes in depth and take corrective measures.
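One way to find the pages worth investigating is to compute a bounce rate per page from exported session counts and list those above a cutoff. The sketch below assumes the classic definition (single-page sessions divided by total sessions); analytics tools may define bounce rate differently, and the page paths, counts, and 70% threshold are hypothetical.

```python
def bounce_rate(single_page_sessions, total_sessions):
    """Bounce rate as a fraction, using the single-page-session definition."""
    if total_sessions == 0:
        return 0.0
    return single_page_sessions / total_sessions


def pages_to_investigate(page_stats, threshold=0.7):
    """Return (path, rate) pairs above the threshold, highest rate first.

    page_stats maps a page path to (single_page_sessions, total_sessions).
    The threshold is an assumed cutoff, not an industry standard.
    """
    flagged = []
    for path, (bounces, sessions) in page_stats.items():
        rate = bounce_rate(bounces, sessions)
        if rate > threshold:
            flagged.append((path, rate))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical per-page session counts exported from an analytics tool.
stats = {
    "/pricing": (120, 400),
    "/blog/seo-basics": (450, 500),
    "/faq": (160, 200),
}

for path, rate in pages_to_investigate(stats):
    print(f"{path}: {rate:.0%}")
```

What counts as "abnormally high" depends on the page type, so the threshold is best set per site rather than taken from this example.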

(HaxiTAG search query intent analysis)

Additional Key Points:

- Deepen Rather Than Broaden Content: Updating and enriching existing content is often more effective than constantly creating new content. The "ContentEffortScore" assesses document creation difficulty, with high-quality images, videos, tools, and unique content all contributing positively.

- Title and Content Consistency: Ensure titles accurately summarize subsequent content. Utilize advanced techniques like text vectorization for topic analysis, which is more precise in judging title-content consistency than simple vocabulary matching.

- Leverage Web Analytics Tools: Use tools like Google Analytics to track visitor interactions, promptly identify issues, and resolve them. Pay special attention to bounce rates; if they are abnormally high, investigate the causes in depth and take corrective measures. Google achieves deep analysis through data collected via the Chrome browser.

- Focus on Low-Competition Keywords: Initially prioritize optimizing for keywords with less competition, making it easier to establish positive user signals.

- Build High-Quality Backlinks: Prioritize links from the latest or high-traffic pages in the HiveMind, as they transmit higher signal value. Avoid linking to pages with low traffic or engagement. Additionally, backlinks from the same country and with relevant content have advantages. Be wary of "toxic" backlinks to avoid damaging scores.

- Consider Link Context: When evaluating link value, consider not only the anchor text itself but also the natural flow of the surrounding text. Avoid using generic phrases like "click here" as their effectiveness has been proven poor.

- Sensible Use of the Disavow Tool: This tool is used to disavow undesirable links, but leaked information suggests it is not directly used by the algorithm and serves more for document management and anti-spam work.

- Emphasize Author Expertise: If using author citations, ensure they have good external reputations and possess professional knowledge. A few highly qualified authors often outperform numerous low-credibility authors. Google can assess content quality based on author expertise, distinguishing between experts and non-experts.

- Create Unique, Practical, and Comprehensive Content: This is particularly important for key pages, which should demonstrate your professional depth and back it with strong evidence. External writers can be hired to produce content, but without substantive quality and professional knowledge behind it, high rankings are difficult to achieve.
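The link-context point above, about avoiding generic phrases like "click here", lends itself to a quick audit. The sketch below flags generic anchor texts in a list of links and reports their share. The phrase list and the sample anchors are assumptions chosen for illustration; only "click here" comes from the text.

```python
# Assumed list of generic phrases; only "click here" is named in the article.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "this link"}


def is_generic_anchor(anchor_text):
    """Return True if the anchor text is a generic phrase after normalization."""
    normalized = " ".join(anchor_text.lower().split()).strip(".!:")
    return normalized in GENERIC_ANCHORS


def generic_anchor_share(anchors):
    """Fraction of anchors that are generic; 0.0 for an empty list."""
    if not anchors:
        return 0.0
    return sum(is_generic_anchor(anchor) for anchor in anchors) / len(anchors)


# Hypothetical anchor texts collected from a backlink report.
sample_anchors = [
    "Click  Here",
    "SEO audit checklist",
    "read more",
    "HaxiTAG search intent analysis",
]

print([a for a in sample_anchors if is_generic_anchor(a)])
print(generic_anchor_share(sample_anchors))
```

This only inspects the anchor text itself. Judging the natural flow of the surrounding text, as the article recommends, would need the full sentence around each link.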

Through the comprehensive application of these strategies, businesses can effectively enhance their website's SEO performance, attract a broader readership, and gain an advantageous position in fierce market competition. SEO is not merely the application of technology, but a profound understanding of user needs and continuous improvement of content quality.
